This is perhaps obvious, but there's more to a normal classification system than the mere fact that it technically can be operated to classify.

Classification systems and policies

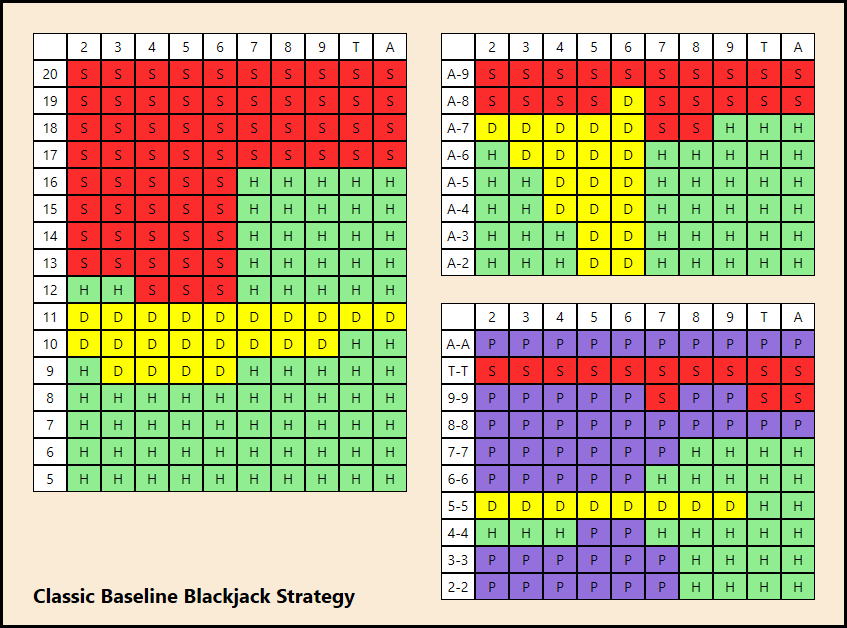

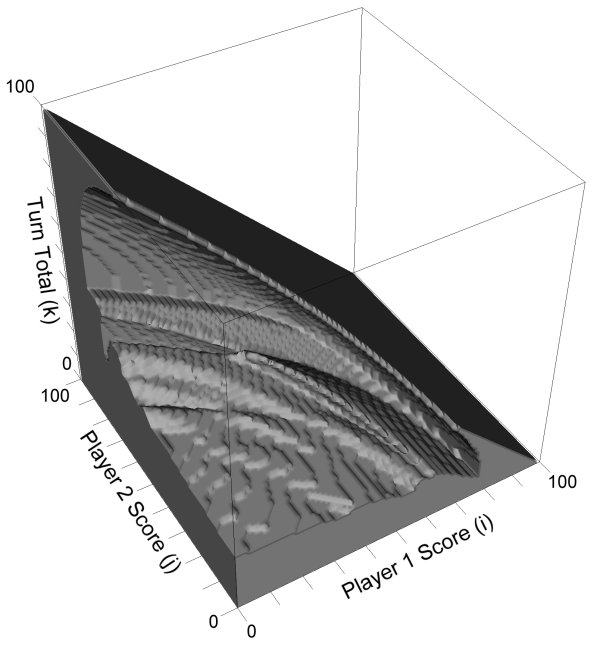

What do I mean by a classification system? Let's look at concrete examples. Concrete examples are generally examples of both classification systems and policies. This is a diagram (which was taken from a mildly interesting blog post called "Winning Blackjack using Machine Learning: A Practical Example of a Genetic Algorithm" by Greg Sommerville) that describes the classic strategy for how to play blackjack.

To decide what to do, you figure out where you are on this chart, and then the letter (and color) of that chart dictates what action to take. The left table is for hard hands, the table in the upper right is for soft hands, and the table in the lower right is for pairs.

This is an example of both a policy and a classification system. If you shuffled the letters, you would instead have a different policy, but the same classification system.
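To make the split concrete, here is a minimal sketch in Python. It is not the chart from the blog post: the three rows, two columns, and the letters in `POLICY` are a deliberately coarse simplification I made up for illustration, and the names `classify`, `POLICY`, `shuffle_letters`, and `act` are hypothetical.

```python
import random

def classify(hard_total, dealer_upcard):
    """Classification system: map a hard-hand situation to a chart cell.

    dealer_upcard is 2..10, or 11 for an ace (an assumption of this sketch).
    """
    if hard_total <= 11:
        row = "11 or less"
    elif hard_total <= 16:
        row = "12 to 16"
    else:
        row = "17 or more"
    column = "dealer 2-6" if 2 <= dealer_upcard <= 6 else "dealer 7-A"
    return (row, column)

# Policy: one letter per cell (H = hit, S = stand). Coarse toy values.
POLICY = {
    ("11 or less", "dealer 2-6"): "H",
    ("11 or less", "dealer 7-A"): "H",
    ("12 to 16", "dealer 2-6"): "S",
    ("12 to 16", "dealer 7-A"): "H",
    ("17 or more", "dealer 2-6"): "S",
    ("17 or more", "dealer 7-A"): "S",
}

def shuffle_letters(policy, seed=0):
    """Same cells, letters reassigned: a different policy over the same classification system."""
    rng = random.Random(seed)
    letters = list(policy.values())
    rng.shuffle(letters)
    return dict(zip(policy.keys(), letters))

def act(policy, hard_total, dealer_upcard):
    """Look up the action for a situation by first classifying it."""
    return policy[classify(hard_total, dealer_upcard)]
```

Note that `classify` never mentions hitting or standing, and `shuffle_letters` never touches `classify`. That separation is all I mean by "same classification system, different policy".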

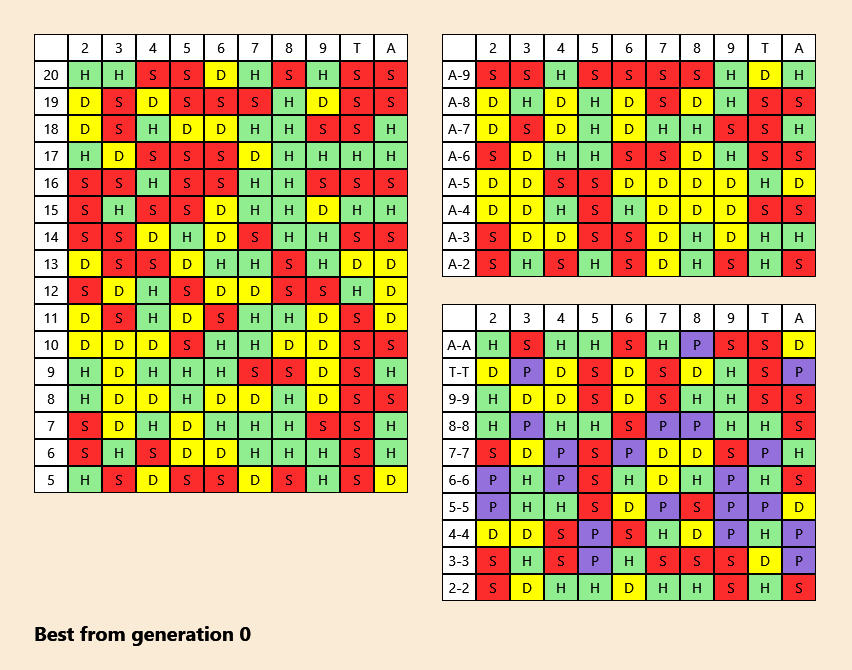

Another example is the dice game Pig. There are three things that you should be paying attention to while playing Pig: player 1's total score, player 2's total score, and the current turn total. That is a three-dimensional, 100 by 100 by 100 grid, and that's the classification system. Then for each cell in that grid, there's a best move, either roll or hold. The optimal policy turns out to be a corrugated shape. There's a paper, "Practical Play of the Dice Game Pig" by Neller and Presser, and Numberphile also made a video about it.

A third example is underactuated swing-up of an Acrobot. The classification system of the Acrobot is the state space, which has two dimensions of (angular) positions and two dimensions of (angular) momentums. The policy is a function from points in the state space to torques, and a good policy can lead to swing-up.

Aside: To someone who is familiar with production systems, it might make sense to say that a classification system is a set of patterns of some production system. A production system is, roughly speaking, a set of "if PATTERN then ACTION" rules. H. A. Simon and Allen Newell suggested production systems as models of human intelligence and also a possible route to artificial intelligence, and if you want to learn more about them, you might look into the (historical) OPS5 and the (still active) ACT-R and Soar cognitive architectures.

Returning to the blackjack example, if you rephrased it as a set of "if PATTERN then ACTION" rules, it might contain rules like this:

- Soft 20 (A,9) always stands.

- Soft 19 (A,8) doubles against dealer 6, otherwise stand.
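As a rough sketch, the two rules above could be written as pattern/action pairs in Python. The `Situation` and `Rule` types and the `decide` function are names I am inventing here; this is not how OPS5, ACT-R, or Soar represent rules, just the smallest thing that has the "if PATTERN then ACTION" shape.

```python
from typing import Callable, NamedTuple, Optional

class Situation(NamedTuple):
    soft_total: int      # total of a soft hand, counting one ace as 11
    dealer_upcard: int   # 2..10, or 11 for an ace (assumption of this sketch)

class Rule(NamedTuple):
    name: str
    pattern: Callable[[Situation], bool]  # the classification part
    action: str                           # the policy part

RULES = [
    Rule("soft 20", lambda s: s.soft_total == 20, "stand"),
    Rule("soft 19 vs dealer 6",
         lambda s: s.soft_total == 19 and s.dealer_upcard == 6, "double"),
    Rule("soft 19 otherwise",
         lambda s: s.soft_total == 19 and s.dealer_upcard != 6, "stand"),
]

def decide(situation: Situation) -> Optional[str]:
    """Return the action of the rule whose pattern matches, or None if no rule applies."""
    for rule in RULES:
        if rule.pattern(situation):
            return rule.action
    return None
```

Stripping the `action` field from every rule leaves just the patterns, which is the classification system in the sense of the aside above.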

Some funny classification systems

"The Analytical Language of John Wilkins" by Borges is a short essay that contains this excerpt:

"These ambiguities, redundancies and deficiencies recall those that Dr. Franz Kuhn attributes to a certain Chinese dictionary entitled The Celestial Emporium of Benevolent Knowledge. In its remote pages it is written that animals can be divided into (a) those belonging to the Emperor, (b) those that are embalmed, (c) those that are tame, (d) pigs, (e) sirens, (f) imaginary animals, (g) wild dogs, (h) those included in this classification, (i) those that are crazy-acting, (j) those that are uncountable, (k) those painted with the finest brush made of camel hair, (l) miscellaneous, (m) those which have just broken a vase, and (n) those which, from a distance, look like flies."

Another funny classification system is this video "that's right, it goes to the square hole"

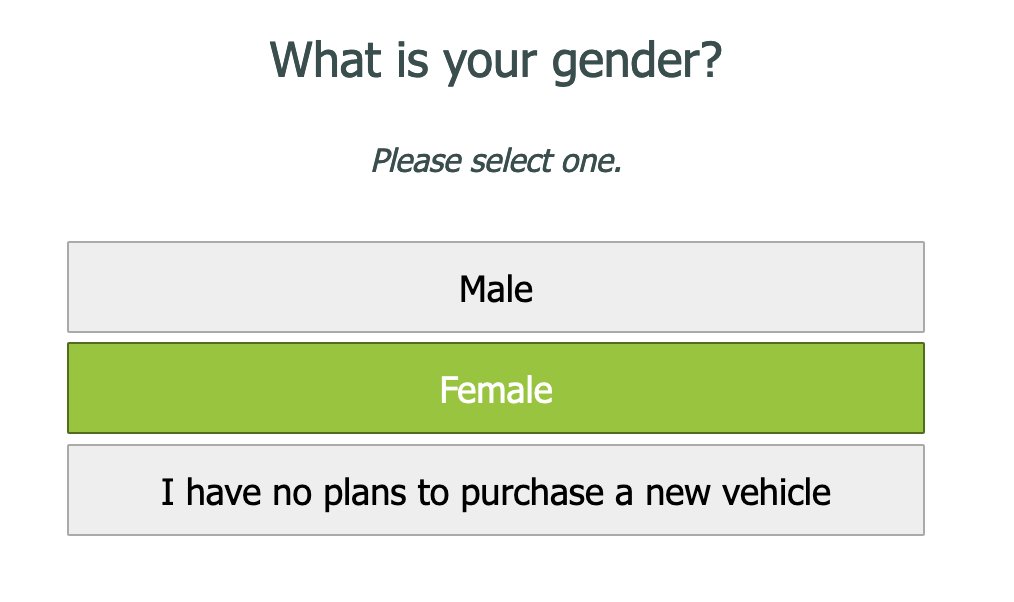

A third funny classification system is "Ah yes, the three genders":

I bring together these three funny examples because I want to show that there's more to being a good or normal classification system than the mere fact that it technically can be operated to classify.

Why "Mutually Exclusive and Collectively Exhaustive" isn't sufficient

Someone might say that the reason these funny systems are funny is that they violate a criterion regarding the goodness of a partition: A partition should be mutually exclusive (no overlaps) and collectively exhaustive (no gaps).

Aside: I know this criterion was popularized by Barbara Minto, as "MECE", which is pronounced rhyming with "grease". I don't know Minto's MECE very well; I am focusing on the narrow meaning of the MECE phrase here, not the whole Minto system.

You can always patch a funny classification system to technically comply with MECE.

If one pattern overlaps another pattern, repair it by ordering the patterns - this ordering might be called "priority" - and "punching a hole" in the lower-priority pattern. For example, "X is a square" and "X is a rectangle" are concepts that overlap. Ordering them might mean putting "X is a square" at a higher priority than "X is a rectangle", and "punching a hole" means redefining "X is a rectangle" to include an "and not" clause, like "X is a rectangle and X is not a square". Now the patterns do not overlap.

If the patterns leave a gap, so that some things are not matched by any pattern, repair it by adding a "misc" pattern at the bottom of the order that matches everything not matched by any other pattern.
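The patch is mechanical enough to write down. Below is a minimal sketch in Python, assuming patterns are plain predicates and shapes are small dictionaries; `make_mece`, `is_square`, `is_rectangle`, and the shape encoding are all hypothetical names chosen for this example.

```python
def is_rectangle(shape):
    return shape["kind"] == "rect"

def is_square(shape):
    return shape["kind"] == "rect" and shape["w"] == shape["h"]

def make_mece(ordered_patterns):
    """Patch (name, predicate) pairs, listed highest priority first, into a MECE set.

    Each pattern gets a hole punched for every higher-priority pattern,
    and a final "misc" pattern catches whatever nothing else matched.
    """
    patched = []
    higher = []
    for name, pattern in ordered_patterns:
        blockers = list(higher)  # snapshot of the higher-priority patterns

        def punched(x, pattern=pattern, blockers=blockers):
            return pattern(x) and not any(b(x) for b in blockers)

        patched.append((name, punched))
        higher.append(pattern)
    everything = list(higher)
    patched.append(("misc", lambda x: not any(p(x) for p in everything)))
    return patched

def classes_of(patched, x):
    """Names of all patched patterns that match x (exactly one, if the patch worked)."""
    return [name for name, pattern in patched if pattern(x)]

patched = make_mece([("square", is_square), ("rectangle", is_rectangle)])
```

With this patch, a 2 by 2 rectangle lands only in "square", a 2 by 3 rectangle only in "rectangle", and a triangle only in "misc". Nothing in `make_mece` looks at what the patterns are for, which is why it can be applied to any list of patterns, sensible or not.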

However, if you try to apply this patch strategy to the funny classification systems I mentioned before, you get funny MECE classification systems. So saying "this violates MECE" isn't sufficient to explain why the funny classification systems are funny.

Why "Natural Kinds" isn't sufficient

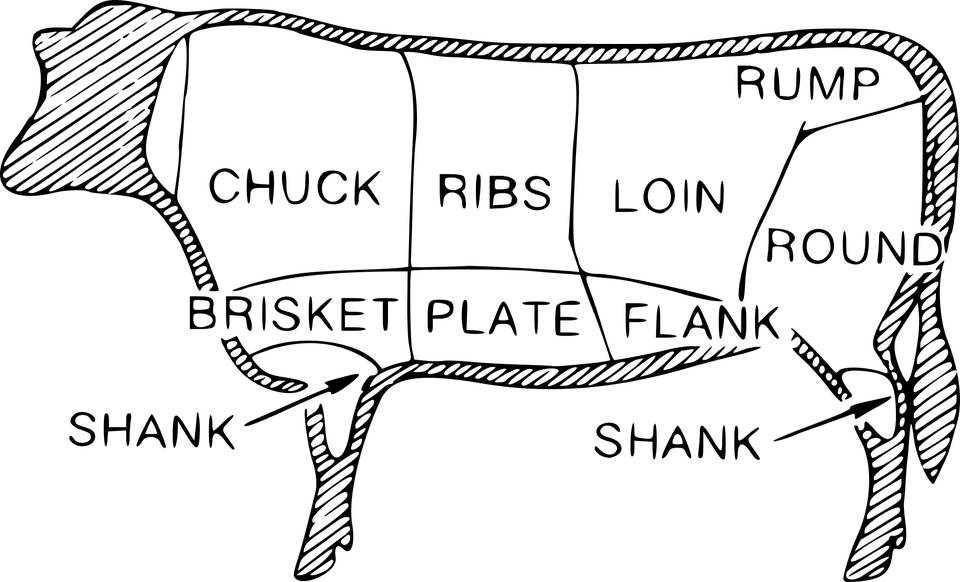

There's an old analogy, from Plato's Phaedrus, that philosophers are like butchers, and that a good classification carves Nature at the joints. Maybe the problem with the funny examples of classification systems is that they violate this recommendation, and are breaking something like an unskilled butcher cutting through a bone.

This is a reasonable objection, and it's definitely true that the Celestial Emporium of Benevolent Knowledge does not "carve Nature at the joints". It's difficult to say that any system definitely does, but the Celestial Emporium definitely does not - I think Borges deliberately designed it that way. However, there are pragmatic, unfunny, classification systems. For example, a log-splitter splits a log into reasonable pieces even though trees do not have joints.

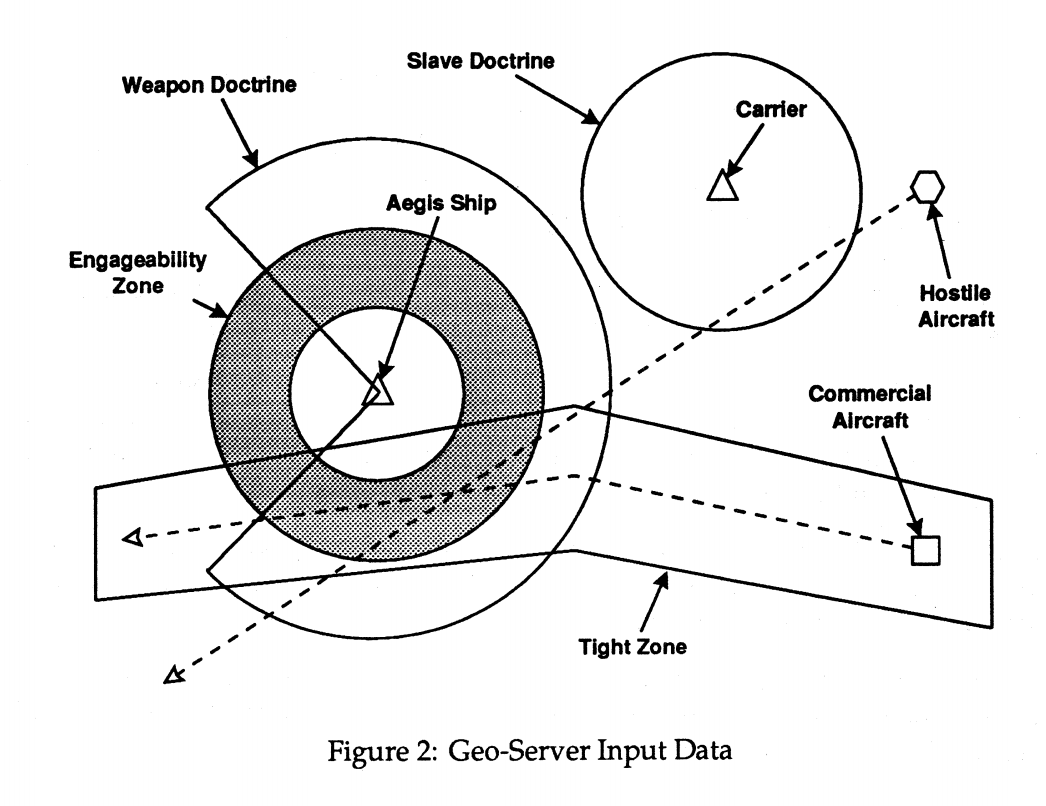

The 1993 ARPA/ONR/NSWC experiment evaluated prototyping languages by having each one used to develop a component called a geo-server (described in Hudak and Jones, Research Report YALEU/DCS/RR-1049, 1994). The geo-server's main task is to determine the presence of friendly and hostile aircraft in various regions called engageability zones, weapon doctrines, and tight zones.

Aside: I encountered this paper when I was first learning Haskell, and had a very strong emotional reaction to it. There's a contrast between, on the one hand the gentle and playful "Haskell School of Expression", which assembles pictures out of functions, and on the other hand, the very direct military application described in this research report RR1049. They are not just two texts that were written by the same author (Paul Hudak) using the same programming language (Haskell). The two pieces of software are written with exactly the same clear, algebraic style and progressive, incremental enhancement of the system's functionality. It was as if I found that my favorite pastry chef also cooked anthrax, and used not just the same kitchen but the same pots and pans to do it. I felt both betrayed and nauseated.

Dividing the ocean into these zones is deeply pragmatic; nobody would claim that this partition of the ocean is discovering and correctly capturing different "natural kinds" of ocean.

And yet, the log-splitter or the geo-servers, despite violating the natural kinds criterion, are unfunny. So there is something about the funny systems which is not captured by "Natural Kinds".

The Tick's Umwelt

Jakob Johann von Uexküll wrote about what the world looks like to a tick. In "The Open: Man and Animal", Giorgio Agamben wrote about, and excerpted, Uexküll.

Following Uexküll's indications, let us try to imagine the tick suspended in her bush on a nice summer day, immersed in the sunlight and surrounded on all sides by the colors and smells of wildflowers, by the buzzing of the bees and other insects, by the birds' singing. But here, the idyll is already over, because the tick perceives absolutely none of it.

This eyeless animal finds the way to her watchpost with the help of only her skin's general sensitivity to light. The approach of her prey becomes apparent to this blind and deaf bandit only through her sense of smell. The odor of butyric acid, which emanates from the sebaceous follicles of all mammals, works on the tick as a signal that causes her to abandon her post and fall blindly downward toward her prey. If she is fortunate enough to fall on something warm (which she perceives by means of an organ sensible to a precise temperature) then she has attained her prey, the warm-blooded animal, and thereafter needs only the help of her sense of touch to find the least hairy spot possible and embed herself up to her head in the cutaneous tissue of her prey. She can now slowly suck up a stream of warm blood.

At this point, one might reasonably expect that the tick loves the taste of the blood, or that she at least possesses a sense to perceive its flavor. But it is not so. Uexküll informs us that laboratory experiments conducted using artificial membranes filled with all types of liquid show that the tick lacks absolutely all sense of taste; she eagerly absorbs any liquid that has the right temperature, that is, thirty-seven degrees centigrade, corresponding to the blood temperature of mammals. However that may be, the tick's feast of blood is also her funeral banquet, for now there is nothing left for her to do but fall to the ground, deposit her eggs and die.

The example of the tick clearly shows the general structure of the environment proper to all animals. In this particular case, the Umwelt is reduced to only three carriers of significance or Merkmalträger: (1) the odor of the butyric acid contained in the sweat of all mammals; (2) the temperature of thirty-seven degrees corresponding to that of the blood of mammals; (3) the typology of skin characteristic of mammals, generally having hair and being supplied with blood vessel.

It's an interesting thought experiment, trying to stretch our sense of empathy and ask "what does it feel like to be a tick?", including investigating with actual experiments what a tick can perceive. (Ian Bogost has a book, Alien Phenomenology, which does the same thought experiment with objects.) There's a superficial conclusion here that the world looks and feels different to a tick because the tick has different sense organs, and an only slightly more interesting conclusion that the world feels different to a tick because the tick has different instincts; for example, the odor of sweat is particularly good or delicious to the tick.

Uexküll and Agamben don't mention it in this passage, but to go a little further, the tick evolved to have those senses because they're helpful in the tick's life cycle. The fact that the tick's lifecycle characteristically includes drinking mammal blood, and the fact that the tick's senses are suitable for distinguishing "probably a mammal nearby" from "probably not a mammal" fit tightly together. Natural selection has nestled them together, in its characteristic circular-causation way. That is, the policy (or the purpose behind the policy), acts as a force on the classification system, tightening the classification system over time to more and more accurately carve the distinctions that matter to the policy (or the purpose behind the policy).

What the Frog's Eye Tells the Frog's Brain

It's sometimes difficult to get a sense of why something is paradigm-shifting, because it's difficult to get yourself back to a previous paradigm. "What the Frog's Eye Tells the Frog's Brain" is a highly cited 1959 paper that said:

"Fundamentally, it shows that the eye speaks to the brain in a language already highly organized and interpreted, instead of transmitting some more or less accurate copy of the distribution of light on the receptors."

And, later:

"As a crude analogy, suppose that we have a man watching the clouds and reporting them to a weather station. If he is using a code, and one can see his portion of the sky too, then it is not difficult to find out what he is saying. It is certainly true that he is watching a distribution of light; nevertheless, local variations of light are not the terms in which he speaks nor the terms in which he is best understood."

And, later:

"The operations thus have much more the flavor of perception than of sensation if that distinction has any meaning now. That is to say that the language in which they are best described is the language of complex abstractions from the visual image. We have been tempted, for example, to call the convexity detectors "bug perceivers." Such a fiber responds best when a dark object, smaller than a receptive field, enters that field, stops, and moves about intermittently thereafter."

I don't have a great grasp of the paradigm before Lettvin, Maturana, McCulloch, and Pitts published, but I think it was an inarticulate assumption something like: "Eyes probably have enough to do with receiving light, and the optic nerve probably has enough to do with transmitting information, it's the brain that probably does things like perceiving, recognizing, or interpreting an image as an image of a bug".

Anyway, this is an example of a purpose or policy of eating flies strongly influencing the "codebook" of "what the frog's eye tells the frog's brain".

Agre and Chapman's Deixis

Phil Agre and David Chapman wrote Pengi, an automated player for Pengo, a game where the player pushes sliding blocks of ice.

One of the innovative aspects of Pengi was deictic representation. "Deixis" is used in linguistics regarding words, such as "I", "here", "now" and "there" that have meanings that are in some sense relative to the utterance.

To explain what deictic representation means, these are some counterexamples from Agre and Chapman's Pengi paper:

- "Block#213 is-at position 427, 991"

- "Block#213 is-type Block"

- "Block#213 is-next-to Bee#23"

These are not deictic representation because they do not make reference to the penguin's situation or goals. Instead of names like "Block#213" or "Bee#23", Pengi employs "indexical-functional entities" (ugh, programmers) which are something like noun phrases containing "I" or "me" like this:

- The block I'm pushing

- The corridor I'm running along

- The bee on the other side of this block next to me

- The block that the block I just kicked will collide with

- The bee that is heading along the wall that I'm on the other side of

Pengi uses these entities in "aspects" (ugh, naming things) which are something like sentences:

- The block I'm going to kick at the bee is behind me (so I have to backtrack)

- There is no block suited for kicking at the bee (so just hang out until the situation improves)

- I've run into the edge of the screen (better turn and run along it)

- The bee I intend to clobber is closer to the projectile than I am (dangerous!)

- [...] but it's heading away from it (which is OK)

- I'm adjacent to my chosen projectile (so kick it)

It's difficult to see the whole classification system for Pengi from the examples, but it's pretty clear that the corresponding actions, the phrases in the parentheses, have shaped the classification system, the sentences before the phrases. That is, kick is an action in the Pengo domain; kick has prerequisites for reasonableness, such as adjacency, and so adjacency shows up in the classification system.

Acquired Distinctiveness, Seeing Like A State, Sorting Things Out's Torque

Douglas H. Lawrence, a researcher at Stanford, did some research on rats, published in 1949 and 1950, about "acquired distinctiveness". The idea is "stimuli to which you learn to make a different response become more distinctive and stimuli to which you learn to make the same response become more similar." (The quote is from the Wikipedia article on categorical perception.) That is, if you (or a rat) are given a perceptual task, such as discerning a category of "light" greys from another category of "dark" greys and giving a categorical response, you will with practice gradually get better at it. Your internal experience of the whole spectrum of greys will also shift: pairs of shades that straddle the boundary you have learned will seem to become more different, and (perhaps necessarily?) the shades within each class will seem to become more similar.

I cannot say this better than the Wikipedia article on Seeing Like A State:

"Seeing Like a State: How Certain Schemes to Improve the Human Condition Have Failed is a book by James C. Scott critical of a system of beliefs he calls high modernism, that centers around confidence in the ability to design and operate society in accordance with scientific laws." One of the case studies from Seeing Like a State is "scientific forestry", which viewed forests that have similar total volume of wood as similar, and ignored other differences. This perspective ("what purpose does this forest have (to the state)? It produces wood.") is what Scott means by the phrase "Seeing Like a State". Scott is particularly interested in the way that taking this perspective seems to make seeing other things more difficult, and in how this blindness leads high modernist schemes like "scientific forestry" to fail.

To be explicit, I am trying to connect Scott's idea of "Seeing Like a State" to Lawrence's idea of "Acquired Similarity". The schemes Scott studied inflicted (on the people operating the scheme) a kind of blindness, blinkeredness and/or inattention due to focusing on a particular angle on the situation. Lawrence mostly talked about "acquired distinctiveness", but "acquired similarity" is implicit in his work.

Aside: I have not read it, but I feel like I have to mention "Sorting Things Out: Classification and Its Consequences". Based on reviews of it, I understand the book uses the word "torque" for the harms that a (state) classification scheme, such as "the identification of South Africans during apartheid as European, Asian, colored, or black", does to people who in one way or another are close to the boundaries of the classification system.

Teleology of classification systems and Conclusion

Unfunny classification systems hang together even better than "MECE" forces them to. They hang together with the dynamics of the system and with the actions that they can trigger, too. You can see the fit between the dynamics of Blackjack (totals matter, aces can be valued as 1 or 11) and the classification system: it includes totals, but not which card was dealt first, and it includes the "hard/soft" distinction, but not the count of face cards, which is irrelevant to Blackjack. The path-independence of physics means that position and momentum are sufficient to capture "everything that matters" about the Acrobot, and the day of the week is irrelevant.
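The Blackjack half of that claim can be sketched in a few lines of Python. This is my own simplified classifier (it ignores the pairs table, and `card_value` and `classify_hand` are hypothetical names), but it shows what the classification keeps and what it throws away.

```python
def card_value(rank):
    """Value of a rank, counting an ace as 1; ranks are 'A', '2'..'10', 'J', 'Q', 'K'."""
    if rank == "A":
        return 1
    if rank in ("J", "Q", "K"):
        return 10
    return int(rank)

def classify_hand(cards):
    """Reduce a hand to (total, 'soft' or 'hard').

    A hand is soft when one ace can be counted as 11 without going over 21.
    Deal order and the number of face cards are deliberately discarded.
    """
    total = sum(card_value(rank) for rank in cards)
    if "A" in cards and total + 10 <= 21:
        return (total + 10, "soft")
    return (total, "hard")
```

Under this sketch, `["A", "7"]` and `["7", "A"]` fall in the same class, as do `["K", "8"]` and `["10", "8"]`. The distinctions that survive are exactly the ones the rules of the game can act on.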

One lightweight way to capture this "patterns hang together with actions" idea is that production systems routinely self-limit; the consequence of firing a rule, in the dynamics, tends to be that the pattern no longer fits. For example, consider "If you are east of Broadway, go west" and "If you are south of Houston, go north". The opposite directions in the pattern part and the action part are not random or coincidental; they're characteristic of self-limiting navigation rules. They might be part of a "navigate within Manhattan to Central Park" production system.
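Here is a small Python sketch of the two navigation rules, assuming a made-up coordinate system where `x` counts blocks east of Broadway and `y` counts blocks north of Houston (negative means west or south). The names `RULES` and `run` are hypothetical.

```python
def east_of_broadway(pos):
    return pos[0] > 0

def south_of_houston(pos):
    return pos[1] < 0

# Each rule is (pattern, action); the action moves one block.
RULES = [
    (east_of_broadway, lambda pos: (pos[0] - 1, pos[1])),  # go west
    (south_of_houston, lambda pos: (pos[0], pos[1] + 1)),  # go north
]

def run(pos, max_steps=100):
    """Fire the first matching rule repeatedly; stop when no pattern fits."""
    fired = 0
    for _ in range(max_steps):
        for pattern, action in RULES:
            if pattern(pos):
                pos = action(pos)
                fired += 1
                break
        else:
            # No rule matched: the system has limited itself.
            return pos, fired
    return pos, fired
```

Starting from `(3, -2)`, the "go west" rule fires three times and then stops matching, the "go north" rule fires twice and then stops matching, and the run halts at `(0, 0)`. Each action erodes its own pattern, which is the self-limiting property.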

A second lightweight way that "patterns hang together with actions" relates to the direct or indirect objects of actions. If the action part of the pattern/action rule is naturally parameterized with a direct or indirect object - approach X, obtain Y - then the pattern part of the rule normally picks out what X or Y actually is, and the pattern does not apply if there is no such entity. For example, in Pengi, "kick" is an action that might be parameterized with both a direct object, an adjacent block, and an indirect object, the sno-bee that the penguin is trying to squash with the block. You might reasonably expect to see "the block that I am adjacent to" and "the sno-bee that I am trying to squash" show up as part of the pattern for "kick". Even if you don't have a policy, just a classification system, knowing that "kick" is present in the dynamics of the domain, you might reasonably expect those subformulas to show up in a classification system associated with that domain.
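A sketch of what that might look like for "kick", in Python. This is not Pengi's actual implementation; the grid coordinates, `adjacent`, and `match_kick` are assumptions I am making to illustrate a pattern that binds the objects its action needs.

```python
from typing import Optional

def adjacent(a, b):
    """True when two grid cells share an edge."""
    return abs(a[0] - b[0]) + abs(a[1] - b[1]) == 1

def match_kick(penguin, blocks, target_bee) -> Optional[dict]:
    """Pattern for the "kick" action.

    Returns bindings for the direct object ("the block that I am adjacent to")
    and the indirect object ("the sno-bee that I am trying to squash"),
    or None when either entity is missing, in which case the rule does not apply.
    """
    if target_bee is None:
        return None
    for block in blocks:
        if adjacent(penguin, block):
            return {"block": block, "bee": target_bee}
    return None
```

The pattern has nothing to say about blocks in general; it only picks out a block that "kick" could take as its object. That is the sense in which the action leaves its shape on the pattern.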

A third lightweight way that "patterns hang together with actions" is a quality of similar granularity or scale among the patterns and actions. You can have a keystroke-level model (such as Card, Moran and Newell's "The keystroke-level model for user performance time with interactive systems"), or you can have a very abstract model (such as Cynefin's 2x2 + 1 categorization, the Red-Green-Refactor cycle, or Kent Beck's 4 rules of simple design), but mixing them and having some patterns refer to keystrokes and others refer to high-level concepts such as whether "the domain" is "complicated" or whether the current design "reveals intentions" would violate this compatible-scale rule.

What is the quality that makes a classification system typically unfunny? I am not completely certain, but my best attempt is:

Classification systems are shaped for purposes

This shaping leaves characteristic toolmarks on classification systems, even if the policy is excised or hidden. With different policies, they might be able to be used for a few different purposes; not just winning at a game, but also deliberately losing at that game, for example.

Funny classification systems are visibly not suited for purposes. Most clearly, "it goes to the square hole" isn't helpful to anyone (because it always goes to the square hole). Furthermore, it is difficult to imagine a purpose that Borges's Celestial Emporium system would be suited for. On the third hand, it's interesting to contrast two ways of patching "the three genders". A MECE patch is still slightly weird:

- I have no plans to purchase a vehicle.

- I do have plans to purchase a vehicle and I am a man.

- I do have plans to purchase a vehicle and I am a woman.

On the other hand, a teleological patch is perhaps successfully unfunny:

- I have no plans to purchase a vehicle.

- I do have plans to purchase a vehicle and I would like to watch the masculine-coded vehicle marketing next.

- I do have plans to purchase a vehicle and I would like to watch the feminine-coded vehicle marketing next.

The teleological patch blends some of the action that the website is likely going to take in response to the button being pushed into the classification system.

In conclusion, I have attempted to show that (unfunny) classification systems are deeply teleological. A process of adapting the classification system to a purpose can act like tightening a screw, causing operators to experience more and more "blinkering" or "acquired similarity" and eventually, catastrophic failures as described in "Seeing Like a State" and "Sorting Things Out".